Origins

Algorithms that try to mimic the brain.

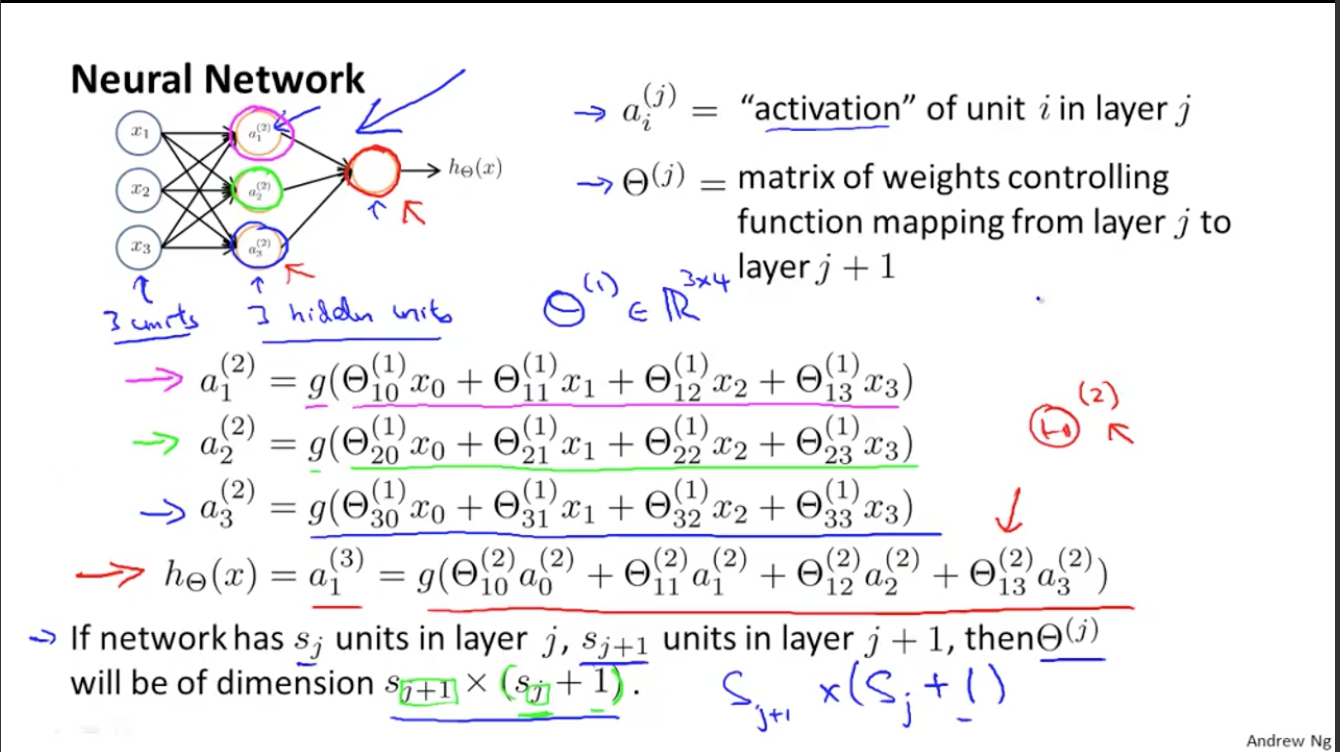

In a neural network, the sigmoid function is usually called the activation function, and the $\theta$ parameters are called weights.
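As a minimal sketch, the sigmoid activation $g(z)=\frac{1}{1+e^{-z}}$ could be written in Python as below. The function name `sigmoid` and the sign-based branching (used only to avoid overflow for very negative inputs) are choices made for this illustration, not part of the original notes.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid g(z) = 1 / (1 + e^(-z))."""
    # Branch on the sign of z so math.exp never receives a large positive
    # argument, which would raise OverflowError.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)
```

The output always lies between 0 and 1, with `sigmoid(0)` equal to 0.5, which is why it can be read as the activation level of a unit.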

Notations

$a_i^{(j)}$ is the activation of unit $i$ in layer $j$, and $\mathbf{\Theta}^{(j)}$ is the matrix of weights controlling the function mapping from layer $j$ to layer $j+1$.

(Slide source: https://www.coursera.org/learn/machine-learning/)

where $g(z)$ is the sigmoid function.

$x_1,x_2,x_3$ form the input layer, $a_1^{(3)}$ is the output layer, and the layers between the input layer and the output layer are called hidden layers.
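As a quick check on the notation (a standard property, added here as a supplement rather than taken from the slide): if layer $j$ has $s_j$ units and layer $j+1$ has $s_{j+1}$ units, then $\mathbf{\Theta}^{(j)}$ has dimension $s_{j+1}\times(s_j+1)$, where the extra column multiplies the bias unit. For a network with three inputs, three hidden units, and one output unit, this gives

$$\mathbf{\Theta}^{(1)}\in\mathbb{R}^{3\times 4},\qquad \mathbf{\Theta}^{(2)}\in\mathbb{R}^{1\times 4}$$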

Vectorized implementation of Forward propagation

Simplify equation

For the equations in the figure above, we let

$$\Theta_{10}^{(1)}x_0+\Theta_{11}^{(1)}x_1+\Theta_{12}^{(1)}x_2+\Theta_{13}^{(1)}x_3=z_1^{(2)}$$

$$\Theta_{20}^{(1)}x_0+\Theta_{21}^{(1)}x_1+\Theta_{22}^{(1)}x_2+\Theta_{23}^{(1)}x_3=z_2^{(2)}$$

$$\Theta_{30}^{(1)}x_0+\Theta_{31}^{(1)}x_1+\Theta_{32}^{(1)}x_2+\Theta_{33}^{(1)}x_3=z_3^{(2)}$$

So the equations simplify to

$$a_1^{(2)}=g\left(z_1^{(2)}\right)$$

$$a_2^{(2)}=g\left(z_2^{(2)}\right)$$

$$a_3^{(2)}=g\left(z_3^{(2)}\right)$$

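$$h_{\Theta}(x)=a_1^{(3)}=g\left(\Theta_{10}^{(2)}a_0^{(2)}+\Theta_{11}^{(2)}a_1^{(2)}+\Theta_{12}^{(2)}a_2^{(2)}+\Theta_{13}^{(2)}a_3^{(2)}\right)$$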
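The unit-by-unit equations above can be sketched directly in plain Python. The weight values in `theta1` and `theta2` are hypothetical numbers chosen only for illustration, the function name `forward_unit_by_unit` is made up for this example, and the code assumes the usual convention that the bias terms $x_0$ and $a_0^{(2)}$ are both equal to 1.

```python
import math


def g(z):
    """Sigmoid activation g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical weights (illustration only).
# theta1[i - 1][k] plays the role of Theta^{(1)}_{ik};
# theta2[k] plays the role of Theta^{(2)}_{1k}.
theta1 = [
    [0.1, 0.2, -0.3, 0.4],
    [-0.5, 0.6, 0.7, -0.8],
    [0.9, -1.0, 1.1, 1.2],
]
theta2 = [0.3, -0.2, 0.5, 0.1]


def forward_unit_by_unit(x1, x2, x3):
    """Compute h_Theta(x) one unit at a time, mirroring the scalar equations."""
    x = [1.0, x1, x2, x3]  # assumed convention: x_0 = 1 is the bias input
    # z_i^{(2)} = sum_k Theta^{(1)}_{ik} * x_k for each hidden unit i
    z2 = [sum(w * xk for w, xk in zip(row, x)) for row in theta1]
    # a_i^{(2)} = g(z_i^{(2)}), with the bias unit a_0^{(2)} = 1 prepended
    a2 = [1.0] + [g(z) for z in z2]
    # Output unit: weighted sum of the hidden activations, then sigmoid
    z3 = sum(w * a for w, a in zip(theta2, a2))
    return g(z3)
```

Calling `forward_unit_by_unit(0.5, -1.0, 2.0)` returns a single number between 0 and 1, the network's hypothesis for that input.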

Vectorize

$$\mathbf{x}=
\begin{Bmatrix}
x_0 \\
x_1 \\
x_2 \\
x_3
\end{Bmatrix}$$

$$\mathbf{z}^{(2)}=
\begin{Bmatrix}
z_1^{(2)} \\
z_2^{(2)} \\
z_3^{(2)}
\end{Bmatrix}$$

$$\mathbf{z}^{(2)}=\mathbf{\Theta}^{(1)}\mathbf{x}\tag{1}$$

$$\mathbf{a}^{(2)}=g\left(\mathbf{z}^{(2)}\right)$$

Note that $\mathbf{a}^{(2)}$ and $\mathbf{z}^{(2)}$ are both three-dimensional vectors here, and $g$ is applied element-wise.

If we take

$$\mathbf{a}^{(1)}=\mathbf{x}$$

equation $(1)$ can be rewritten as

$$\mathbf{z}^{(2)}=\mathbf{\Theta}^{(1)}\mathbf{a}^{(1)}$$

Finally, by adding the bias unit $a_0^{(2)}=1$ to $\mathbf{a}^{(2)}$ (so that $\mathbf{a}^{(2)}$ is now a four-dimensional vector) and defining $z^{(3)}=\Theta_{10}^{(2)}a_0^{(2)}+\Theta_{11}^{(2)}a_1^{(2)}+\Theta_{12}^{(2)}a_2^{(2)}+\Theta_{13}^{(2)}a_3^{(2)}$, we obtain

$$\mathbf{z}^{(3)}=\mathbf{\Theta}^{(2)}\mathbf{a}^{(2)}$$

The hypothesis then becomes

$$h_{\Theta}(x)=\mathbf{a}^{(3)}=g\left(\mathbf{z}^{(3)}\right)$$
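The vectorized equations map almost line for line onto NumPy. The following is a sketch under stated assumptions rather than a definitive implementation: the names `sigmoid` and `forward` are made up for this example, the weights are random placeholders, and the bias entries $x_0$ and $a_0^{(2)}$ are assumed to equal 1.

```python
import numpy as np


def sigmoid(z):
    """Element-wise sigmoid g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, theta1, theta2):
    """Forward propagation for a 3-3-1 network.

    x:      shape (3,)   input features x_1..x_3 (bias added inside)
    theta1: shape (3, 4) weights from layer 1 to layer 2
    theta2: shape (1, 4) weights from layer 2 to layer 3
    """
    a1 = np.concatenate(([1.0], x))            # a^(1) = x, with x_0 = 1
    z2 = theta1 @ a1                           # z^(2) = Theta^(1) a^(1)
    a2 = np.concatenate(([1.0], sigmoid(z2)))  # a^(2) = g(z^(2)), plus a_0^(2) = 1
    z3 = theta2 @ a2                           # z^(3) = Theta^(2) a^(2)
    return sigmoid(z3)                         # h_Theta(x) = a^(3) = g(z^(3))


# Placeholder weights (illustration only), drawn from a seeded generator.
rng = np.random.default_rng(0)
theta1 = rng.normal(size=(3, 4))
theta2 = rng.normal(size=(1, 4))
h = forward(np.array([0.5, -1.0, 2.0]), theta1, theta2)
```

Here `h` is an array of shape `(1,)` holding the hypothesis value. Replacing the per-unit sums with matrix products is what makes the same code extend to layers of any size.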

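In effect, the neural network learns its own features: the hidden-layer activations $\mathbf{a}^{(2)}$ act as learned inputs to the final sigmoid unit, instead of the raw inputs $x_1,x_2,x_3$.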